Many of our model tests will depend on video footage of feeding fish, from which we digitize the 3-D coordinates of attempted prey capture maneuvers to compare against behaviors predicted by the model.

We use a stereo camera setup to get two views of everything the fish does, and digitize it in VidSync like this.

After 33 minutes of digitizing behavior for that particular fish (so far), we can calculate a cloud of points (in green) representing the positions of potential prey, relative to the fish, when the fish detected them:

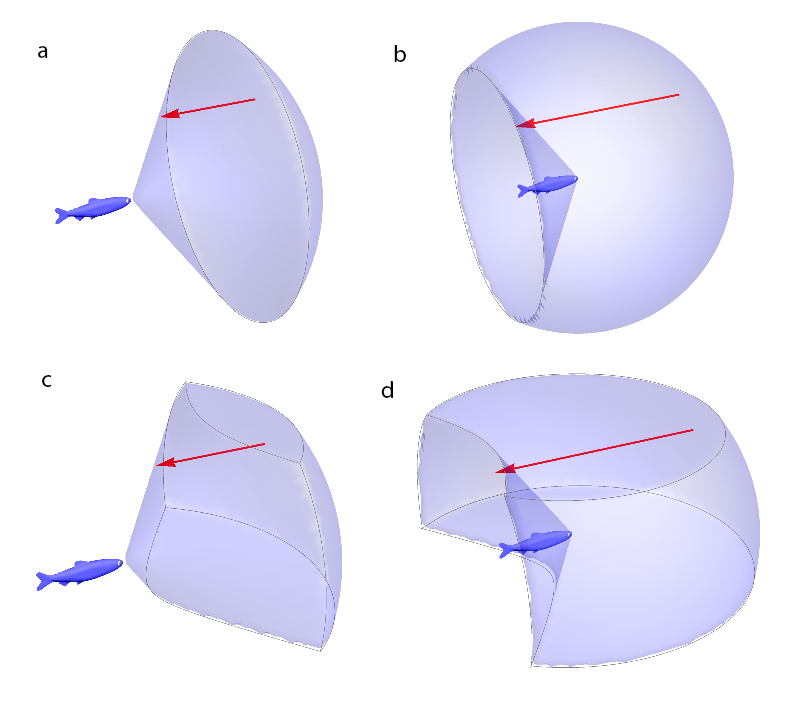

We’ll be comparing various aspects of these reaction fields to predicted volumes that look something like this:

피망뉴맞고는 고스톱 게임 중에서도 많은 사람들이 즐기는 국민게임입니다.

특히나 모바일에서는 남녀노소 누구나

쉽게 즐길 수 있어서 더욱 인기가 많은데요.

저 역시 어렸을 때 부터 아버지랑 같이 많이 했던 추억이 있네요.

지금은 성인이 되어서 친구들과 자주 하고 있답니다.

이번 포스팅에서는 피망뉴맞고 하는 방법에 대해서 소개해드릴게요.

피망뉴맞고 다운로드 링크 알려주세요~

위 사진을 클릭하면 구글 플레이스토어로 이동합니다.

모바일로도 PC버전처럼 이용가능한가요?

네 물론이죠! 피시버전이랑 동일하게 웹사이트 접속해서 로그인 후 즐기시면 됩니다.

PC버전으로는 어떻게 해야하나요?

피망뉴맞고 설치하기 바로가기 위 주소로 접속하셔서 안내대로 진행순서대로 읽으시고

회원가입 하시고 즐겨주시면 됩니다.

1:1문의는 어디서 하나요?

메인화면 하단에 있는 고객센터를 클릭하시면 1:1문의가 가능합니다.

고스톱 치다보면 시간가는 줄 모르고 계속 하게 되는데요.

너무 무리하진 마시고 적당히 쉬면서 하시길 바랍니다.

즐거운 하루 되세요^^